UDPTX

Revision as of 13:46, 13 September 2020

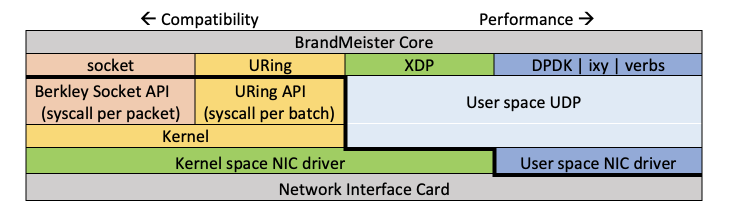

UDPTX is BrandMeister's own UDP communication library, used to send and receive UDP traffic quickly. The less time BrandMeister spends sending and receiving packets, the smoother the transmission (and ultimately the audio).

UDP Transmitter

BrandMeister currently provides several backends (options) for sending outgoing UDP traffic:

- socket

- raw

- AF_XDP

- DPDK

- Ixy

socket

This is the standard, default backend. It uses Berkeley sockets to send traffic, tries to send data in non-blocking mode, and has a dedicated transmission thread that re-sends failed packets.

You have to use it if you have:

- non-ethernet interfaces

- more than one interface for outgoing traffic (such as public + AMPR, or one for IPv4 and another one for IPv6)

- huge routing tables

Performance

Passed performance tests transmitting to 5K connections.

Configuration

transmitter = "socket";
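
The behaviour described for this backend (non-blocking send plus a retry path for packets that could not be sent) can be illustrated with a minimal Python sketch. This is not BrandMeister's code: the class name `RetryingSender` and its methods are hypothetical, and the retry step is shown as a plain `flush()` call, whereas the real backend is described as using a dedicated thread.

```python
import collections
import socket


class RetryingSender:
    """Hypothetical illustration of a non-blocking UDP sender with a retry queue."""

    def __init__(self):
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        self.sock.setblocking(False)        # sendto() never blocks the caller
        self.pending = collections.deque()  # packets waiting to be re-sent

    def send(self, payload, address):
        """Try to send immediately; queue the packet if the socket would block."""
        try:
            self.sock.sendto(payload, address)
            return True
        except BlockingIOError:
            self.pending.append((payload, address))
            return False

    def flush(self):
        """Re-send queued packets in order; stop as soon as the socket is full again."""
        sent = 0
        while self.pending:
            payload, address = self.pending[0]
            try:
                self.sock.sendto(payload, address)
            except BlockingIOError:
                break
            self.pending.popleft()
            sent += 1
        return sent
```

A caller would use `send()` on the hot path and run `flush()` periodically (or from a separate thread) to drain the queue.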

raw

This is a fast forwarding backend that sends traffic through a raw (PACKET_MMAP) socket on an Ethernet interface. It saves up to 50% of CPU time and has great compatibility.

Limitations

- Requires a single Ethernet interface for all BrandMeister traffic (both IPv4 and IPv6; local site connectivity will not work)

- All traffic is routed via the default gateway (except loopback, see next bullet)

- Loopback addresses (127.0.0.1 and ::1) are handled using Berkeley sockets

Performance

Passed performance tests transmitting to 20K connections.

Configuration

transmitter = "raw:<interface name>";

transmitter = "raw:eth0";

AF_XDP

This is a faster forwarding backend that sends traffic through an AF_XDP socket on an Ethernet interface and, in most cases, communicates directly with the Linux network interface driver.

Limitations

- The same set of limitations as raw

- Requires Linux kernel >= 4.18

- The interface has to be configured with the same number of TX and RX queues (see man ethtool)

- May have compatibility problems (the NIC may not support XDP)
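
The kernel version and queue count requirements above can be checked before switching to this backend. The following Python sketch is only an illustration: the function names are hypothetical, and it assumes the per-queue `rx-N` / `tx-N` directories under `/sys/class/net/<interface>/queues` reflect the configured queue counts (`ethtool -l` remains the authoritative tool). It does not test whether the NIC driver actually supports XDP.

```python
import os
import platform
import re


def kernel_at_least(release, major, minor):
    """Return True if a release string such as '5.4.0-42-generic' is >= major.minor."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        return False
    return (int(match.group(1)), int(match.group(2))) >= (major, minor)


def queue_counts(interface, sysfs="/sys/class/net"):
    """Count the rx-N and tx-N queue directories the kernel exposes for an interface."""
    names = os.listdir(os.path.join(sysfs, interface, "queues"))
    rx = sum(1 for name in names if name.startswith("rx-"))
    tx = sum(1 for name in names if name.startswith("tx-"))
    return rx, tx


def af_xdp_preflight(interface):
    """Check the two requirements that can be verified from user space."""
    rx, tx = queue_counts(interface)
    return kernel_at_least(platform.release(), 4, 18) and rx == tx
```

For example, `af_xdp_preflight("eth0")` returns False on a 4.9 kernel or when the interface exposes a different number of RX and TX queues.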

Performance

Passed performance tests transmitting to 30K connections.

Configuration

transmitter = "xdp:<interface name>";

transmitter = "xdp:eth0";

DPDK

This is the fastest forwarding backend. It uses a kernel-bypass NIC driver to send traffic and saves much more CPU time thanks to direct polling of the NIC and the CRC offload features of some NIC models. In some tests we measured up to 75% acceleration. A list of supported NIC models can be found here.

Limitations

- The same set of limitations as raw

- You need a separate NIC, or a detachable virtual NIC port, dedicated to DPDK transmission

- Only DPDK port #0 will be used

- dpdk-proc-info and dpdk-pdump are supported

- Very hard to configure and tune for performance!

Performance

Passed performance tests transmitting to 40K connections.

Configuration

transmitter = "Modules/DPDK-edge.so:<reference interface> <EAL parameters> [--] <module parameters>";

transmitter = "Modules/DPDK-edge.so:eth0 -w 0000:af:00.0 --file-prefix bm --lcores '(0-64)@0' -- -c 1 -q 2048 -b 1 -l 4096";

- The reference interface is a kernel-attached interface used for normal communications (see the raw backend). DPDK reuses its IP addresses and default gateway

- For EAL parameters please read this documentation

- The number of NIC queues used is the defined number of slave logical cores minus one, multiplied by the core ratio (the ratio is how many queues each core should handle)

- On NUMA machines, the best performance is reached by running on the same CPU the NIC is connected to; pin logical cores with the EAL parameter lcores and BrandMeister's parameter affinity

- In most cases BrandMeister also has to run with root privileges; you can arrange this by overriding the systemd configuration (brandmeister@.service.d):

# /etc/systemd/system/brandmeister@.service.d/override.conf

[Service]

User=root

Module parameters

All these parameters are optional and override the default settings of the PMD or the DPDK module

- (-c) --core-ratio <n> - ratio between NIC queues and DPDK cores

- (-q) --queue-size <n> - set PMD queue size to <n> slots (instead of automatically generated)

- (-b) --batch-size <n> - set maximum batch size to <n> slots (instead of automatically generated)

- (-l) --buffer-length <n> - set workers buffer length to <n> slots (instead of default value of 2048)

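- (-p) --pthresh <n> - PMD-specific threshold value

- (-h) --hthresh <n> - PMD-specific threshold value

- (-w) --wthresh <n> - PMD-specific threshold value

- (-r) --rs-thresh <n> - PMD-specific threshold value

- (-f) --free-thresh <n> - PMD-specific threshold value; for all five thresholds see https://doc.dpdk.org/guides/prog_guide/poll_mode_drv.html#configuration-of-transmit-queues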

- (-s) --software-crc - force software CRC calculation
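
To make the structure of the DPDK transmitter string concrete, here is a rough Python sketch that splits it into the module path, the reference interface, the EAL parameters and the module parameters, then parses the module parameters listed above. It is an illustration only, not the parser BrandMeister uses: the function names are hypothetical, and treating everything as EAL parameters when `--` is absent is an assumption.

```python
import argparse
import shlex


def split_transmitter(value):
    """Split 'module:<reference interface> <EAL parameters> [--] <module parameters>'."""
    module, _, rest = value.partition(":")   # the first ':' ends the module path
    tokens = shlex.split(rest)               # honours quotes such as '(0-64)@0'
    reference_interface, tokens = tokens[0], tokens[1:]
    if "--" in tokens:
        cut = tokens.index("--")
        return module, reference_interface, tokens[:cut], tokens[cut + 1:]
    # Assumption: without '--' every remaining token is an EAL parameter.
    return module, reference_interface, tokens, []


def module_parameter_parser():
    """Build a parser mirroring the documented module parameters."""
    parser = argparse.ArgumentParser(add_help=False)  # -h is taken by --hthresh
    parser.add_argument("-c", "--core-ratio", type=int)
    parser.add_argument("-q", "--queue-size", type=int)
    parser.add_argument("-b", "--batch-size", type=int)
    parser.add_argument("-l", "--buffer-length", type=int, default=2048)
    for short, long_name in (("-p", "--pthresh"), ("-h", "--hthresh"),
                             ("-w", "--wthresh"), ("-r", "--rs-thresh"),
                             ("-f", "--free-thresh")):
        parser.add_argument(short, long_name, type=int)
    parser.add_argument("-s", "--software-crc", action="store_true")
    return parser


example = ("Modules/DPDK-edge.so:eth0 -w 0000:af:00.0 --file-prefix bm "
           "--lcores '(0-64)@0' -- -c 1 -q 2048 -b 1 -l 4096")
module, interface, eal, module_args = split_transmitter(example)
options = module_parameter_parser().parse_args(module_args)
```

With the example string from the Configuration section, `eal` holds the six EAL tokens up to `--`, and `options` carries a core ratio of 1, a queue size of 2048, a batch size of 1 and a buffer length of 4096.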

Ixy

Ixy is a very experimental, lightweight user-space network driver. It currently supports the Intel 82599ES family (aka Intel X520) and virtio. Please read the Ixy documentation.

Limitations

- Similar to DPDK but experimental and buggy

- Single thread

- Software CRC only

Performance

Passed performance tests transmitting to 10K connections.

Configuration

transmitter = "Modules/Dixie.so:[reference interface] <PCI address> <MAC address> [<queue-count> [<buffer-length> <batch-length>]]";

transmitter = "Modules/Dixie.so:eth0 0000:af:00.0 00:1b:21:a5:a0:2c 1 128 32";

Ixy does not provide a method to read the MAC address directly from the NIC, so the address has to be defined in the configuration. We found that Ixy has a bug with a queue count > 1 on ixgbe, so we do not recommend using multiple queues.

UDP Receiver

On the receiving side, the UDPTX driver works in parallel with the socket receiver. All it does is accelerate reception of UDP packets on a particular interface.

eBPF + AF_XDP

This is a modern method of accelerating UDP reception in BrandMeister Core. It saves up to 30% of CPU time.

Limitations

- Requires Linux kernel >= 4.18

- The interface has to be configured with the same number of TX and RX queues (see man ethtool)

- eBPF handles traffic before iptables

- Works with only a single instance of BrandMeister Core on a single machine

Configuration

receiver = "Modules/ExpressFilter.o:<interface name>";

receiver = "Modules/ExpressFilter.o:eth0";

eBPF + AF_XDP + XDPHelper

This method is exactly the same as eBPF + AF_XDP, but uses a small additional daemon, XDPHelper, to load the eBPF program and share it between several BrandMeister Core instances. XDPHelper is supplied with BrandMeister Core and starts automatically only when required (thanks to systemd and D-Bus activation). By default XDPHelper uses the eBPF program ExpressFilter.o (see xdphelper.service).

Limitations

- The same limitations as eBPF + AF_XDP, except that multiple instances are supported

- All instances of BrandMeister Core should use the same network interface

Configuration

receiver = "xdp:<interface name>";

receiver = "xdp:eth0";
